Most of you will – hopefully – have some index maintenance strategy. That is, you need some criteria, when to rebuild or reorganize an index. I will not repeat every advice that you may find elsewhere on the internet, e.g. here, or here.

In almost all given advices the dynamic management view sys.dm_db_index_physical_stats plays a central role, since it can easily be used to detect the degree of fragmentation of an index and the course of action to perform. Generally spoken, if index fragmentation exceeds a distinct value (let’s say 40%), you should perform a complete rebuild of the index. By doing so, the whole index tree is built from scratch and also the index related statistics is rebuilt. If the fragmentation is noticeable, but not too high (somewhere between 10% and 40%), an index reorganize may be sufficient. By performing a reorganize only the index pages in the leaf level are rearranged which is less cost (I/O) intensive than a complete index rebuild.

Unfortunately, sys.dm_db_index_physical_stats can create huge I/O stress, as you can check inside this blog post of Paul Randal (yes, the guru). There is, of course, an opportunity to affect the I/O load created, by specifying the last parameter of the sys.dm_db_index_physical_stats DMF. IF you use LIMITED here, the I/O load is as minimal as possible. DETAILED, on the other hand, may create very noticeable physical I/O, especially for larger indexes, although it should reveal very detailed and realistic.

But be aware that querying the fragmentation by the use of sys.dm_db_index_physical_stats, as also suggested in Books Online (see here), may not be sufficient to detect candidates for necessary rebuilds.

Look at the following example.

We start by creating a simple table and inserting 400,000 rows:

| create table t1

(

c1 uniqueidentifier not null default newsequentialid() primary key

,c2 nchar(513) not null default '#'

)

go

-- Insert 400,000 rows

insert t1(c2)

select top 400000 '?' from sys.trace_event_bindings c1,sys.trace_event_bindings as c2

go |

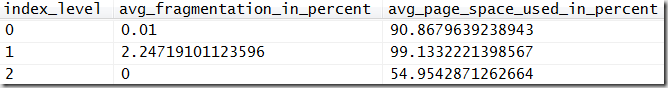

Now, let’s see the fragmentation of the primary key (which is also the clustered key in our case). Oh, btw.: I know very well that a clustered index on a GUID column is less than optimal, but I see this practice all the time. It’s just tat developers love the idea of creating objects (table rows) that are unique throughout the whole universe. But that’s not the point here, so let’s see how the fragmentation as well as the space used values look like. First, we use the LIMITED mode:

| select index_level, avg_fragmentation_in_percent, avg_page_space_used_in_percent

from sys.dm_db_index_physical_stats(db_id(),object_id('t1'),null,null,'limited') |

Here’s the result:

Looks pretty good, he? 0.7% fragmentation, so no need for any actions, right? (avg_space_used_in_percent is not shown in LIMITED mode.) Well, let’s see what sys.dm_db_index_physical_stats returns when running in DETAILED mode:

| select index_level, avg_fragmentation_in_percent, avg_page_space_used_in_percent

from sys.dm_db_index_physical_stats(db_id(),object_id('t1'),null,null,'detailed') |

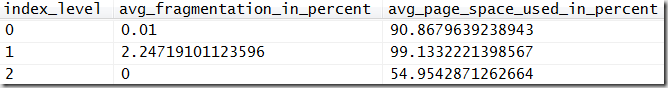

Here’s the result:

Also nothing to worry about, right? Almost no fragmentation. But wait! Why has the index tree a depth of 4? That seems too deep! So let’s see, if an index rebuild will shrink the depth:

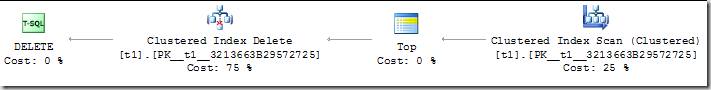

| alter index all on t1 rebuild

go

select index_level, avg_fragmentation_in_percent, avg_page_space_used_in_percent, ''

from sys.dm_db_index_physical_stats(db_id(),object_id('t1'),null,null,'detailed') |

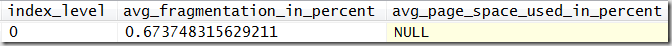

Now the index looks like that:

So, the index three has one level less than before! Therefore, every index seek has to perform one read less – that’s 25% less!

If you look at the avg_space_used_in_percent column, you see why.The non-leaf levels of the index are more packed with data and don’t contain as much space after the rebuild as before.

So be aware, when following the best practices guides in BOL or the internet. Those guidelines are definitely very good and helpful, but may not be sufficient under all circumstances.